The Read/Write Reality workshop ended a few days ago, and it was an incredible experience: a really innovative and fun project with a wonderful crowd of people with different backgrounds, approaches, desires and attitudes.

Here is some information about the workshop and its next steps.

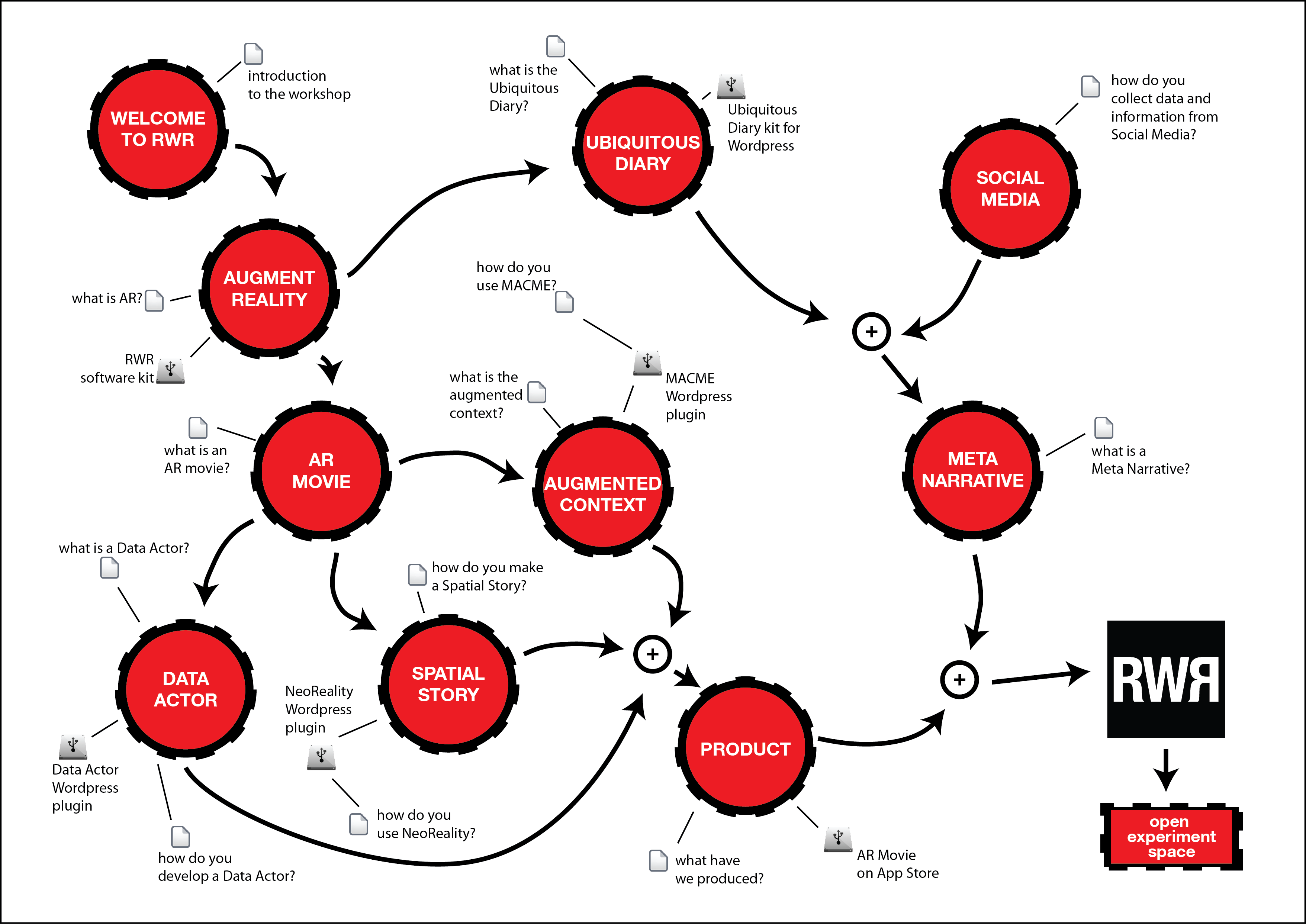

The infographic at the top of this post shows the main structure of the workshop. During RWR, experience, reflection, discussion, scenario visualization, conceptualization, and pragmatic design and development activities came together in an intense and challenging experience for both participants and organizers.

The workshop started with an introduction to the themes of the Augmentation of Reality. We described the approaches we use to create digital layers of information, expression and communication that are seamlessly integrated with the physical world and that allow for the independent, free, autonomous representation and enactment of our points of view on reality.

We then moved on to more technical and philosophical subjects, exploring the multiple definitions that can be given to the concept of Augmented Reality, starting from the more philosophical ones emerging from situationist practices and arriving at the ones that are more oriented to art, business, activism, marketing and communication.

The idea of being able to create additional layers of accessible information stratified on top of our ordinary reality, expressing multiple points of view and approaches to the world, became clear and suggested scenarios and ideas.

We presented a complete software kit that was used in the later parts of the workshop, including tools dedicated to the creation of AR applications for mobile devices and the development frameworks that allow for the creation of ubiquitously accessible content.

The workshop’s objective was to understand the possibilities offered by ubiquitous publishing techniques and methodologies and to produce an end-to-end project that would be published at the end of the workshop.

Thus, we introduced the project to be developed: an Augmented Reality Movie!

The Augmented Reality Movie

We introduced the idea of being able to create a new kind of cinematographic experience. Classic Cinema proposes a linear storyline (even in those cases in which directors and screenwriters experiment, as in movies such as Memento or Inception, the experience offered by Cinema is a linear one) which is the product of a single point of view: the movie camera is a single eye onto a time/space using characters, locations and events to propose a single perspective.

The idea of an Augmented Reality movie proposes a model which is radically different from this.

In an AR movie the storyline unfolds in non-linear ways, across a series of different points of view, in a time dis-continuum which can include synchronicity, atemporality, and simultaneity.

We described a possible scheme for our to-be-produced AR Movie, composed of the following elements:

- an Augmented Context

- a Spatial Narrative

- a Data Actor

The Augmented Context

We have defined the Augmented Context as the whole of those elements found in ordinary physical space which expose, signal and make visually accessible the existence of additional, augmented layers of digital information, content and interactivity.

To understand why our movie needs an Augmented Context, we can refer to the two basic scenarios in which our production is experienced:

- people who have the mobile application of our movie

- people who don’t have the mobile application of our movie

While traversing the physical space, people who don’t yet have the mobile app used to experience the AR Movie need a visual alert to become aware that augmented content exists in the space.

Even the people who do have the mobile app are not expected to walk with their smartphone constantly in front of their faces (try it! your arm will hurt in a few minutes, and you’ll look like a stupid cyborg :) ).

The Augmented Context serves the following two purposes:

- visually alert people to the existence of augmented content in a physical space

- provide contextual information and opportunities for interaction

The Augmented Context can take a variety of forms:

- stickers with QRCodes disseminated in the architectural space

- urban furniture and signage providing information and instructions on how to access the AR content

- stenciled QRCodes, sounds, signs, billboards and anything that can be imagined as placed in the physical space to offer information and awareness about the presence of the augmented content

The Augmented Context can be created to include QRCodes to link to:

- instructions on the access and usage of the mobile app needed to access the AR Experience

- background information on the theme of the movie (or other AR Content)

- realtime information coming from databases and/or social networks

- opportunities for interaction, allowing people to comment and describe their experience and to interconnect with other previous visitors

To create the Augmented Context we used the MACME platform created by FakePress Publishing and available under a GPL license from the WordPress Plugin repository and on the project’s GitHub page.

MACME lets you use a WordPress CMS to create cross-media content, including text, video, sounds, and social media, and automatically generates it in versions suitable for printing, attaching, packaging, and putting on stickers, t-shirts and any object or location onto which a QRCode can be attached.

The Spatial Narrative

The Spatial Narrative is where the “action” takes place.

Multiple subjects provide their own point of view on the story/subject/plot of the AR Movie. To do this they produce content designed to be placed in space using some form of AR Content Management System (CMS).

This scenario opens up an incredible number of opportunities for investigation and discussion. Among these are:

- the idea of creating a narrative through a multiplicity of points of view

- the idea of creating a narrative which is not intended for sequential, linear viewing, and which is enacted each time in a different way according to how the viewer traverses space

- the idea of creating a narrative of a new kind, whose elements are thought to be experienced in a specific place or, even, at a specific time

- the idea of creating a narrative involving a massive number of points of view (a movie with 100 million actors! it could happen anytime soon)

- the idea of creating a narrative which is emergent (if I add another contribution to the AR movie today, the narrative changes accordingly)

- the idea of not creating a narrative, but a new form of expression involving spatially disseminated elements of expression, knowledge, information and interactivity (we could call it emergent environmental narrative, for example)

All these issues were addressed during the workshop, and different participants used very different approaches, even suggesting advanced scenarios involving peculiar approaches to time (narrative elements in the same space, accessible simultaneously, but referring to different instants of time across past, present and future) and to subjectivity and identity.

An issue that definitely emerged was the engagement of the territory. This kind of project suggests multiple ways in which positive effects could be created for the local inhabitants of the territory in which the AR movie takes place. The AR Movie was experienced as a complex mixture of entertainment, tool for awareness, innovative tourism, instrument for local development, knowledge sharing framework, atypical marketing and more.

Workshop participants engaged local people in acting and expressing themselves, and an interesting experiment was also performed by filming parts of the movie in a local traditional bakery, where the narration was interwoven with the beautiful experience of the traditional production of Babà desserts.

To create the Spatial Narrative we used the NeoReality WordPress plugin. NeoReality can be used to easily produce spatialized content: images, sounds, text and 3D objects can be placed in space (coordinates, height, orientation) using a visual editor. The plugin includes a ready-to-customize-and-compile iPhone/iPad application that implements an AR browser like the ones offered by Layar and Junaio. NeoReality is offered to the community as Open Source software distributed under a GPL3 license, and is accessible on Art is Open Source and on the GitHub project page.
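To make the idea of spatialized content a bit more concrete, here is a minimal Python sketch of how a piece of content placed in space could be represented, and how an AR browser might pick the items close to the viewer. It is only an illustration and not NeoReality's actual code or data model: the `SpatialItem` record, its field names and the `nearby` helper are all assumptions made up for this example.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_M = 6_371_000  # mean Earth radius, in meters


@dataclass
class SpatialItem:
    """Hypothetical record for one piece of content placed in space."""
    title: str
    kind: str           # e.g. "image", "sound", "text" or "3d"
    lat: float          # latitude, in degrees
    lon: float          # longitude, in degrees
    height_m: float     # height above the ground, in meters
    heading_deg: float  # orientation, in degrees clockwise from north


def distance_m(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points (haversine formula)."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi = phi2 - phi1
    dlmb = radians(lon2 - lon1)
    a = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * asin(sqrt(a))


def nearby(items, lat, lon, radius_m):
    """Return the items within radius_m of the viewer, nearest first."""
    scored = [(distance_m(lat, lon, i.lat, i.lon), i) for i in items]
    scored.sort(key=lambda pair: pair[0])
    return [item for dist, item in scored if dist <= radius_m]
```

A viewer walking through the space would call `nearby(items, viewer_lat, viewer_lon, 100)` at each position update, so the set of narrative elements they encounter depends on the path they take.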

The Data Actor

To highlight the idea of a movie built using a multiplicity of points of view, during the workshop we created the DataActor.

The Data Actor is intended as a peculiar actor in the movie, representing and giving expression to all the voices of social network users expressing themselves in realtime on the themes of the movie.

The Data Actor has been developed as a WordPress Plugin with specific integration features with the NeoReality Platform.

If installed alone, the Data Actor can be configured to be sensitive to specific keywords on social networks such as Twitter, Facebook and FourSquare: as soon as the users of these social networks publish posts using these keywords, the content is immediately captured by the plugin. If the captured content includes geographic information (such as geolocalized Twitter messages or Facebook updates including Places information), it is placed on a map as geo-referenced content.

If installed on a WordPress CMS in which the NeoReality Plugin is also installed, the content created by DataActor is automatically included in the AR visualizations of NeoReality’s mobile app.

The DataActor, thus:

- automatically creates content on WordPress by capturing the public expressions of social network users on a series of keywords and phrases

- if this content can be geo-referenced, it includes it in the NeoReality AR experience
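The two steps above can be sketched in a few lines of Python. This is a simplified illustration under our own assumptions, not the plugin's code: the `Post` record, the `matches` and `capture` helpers, and the plain substring matching are all hypothetical, and no real social network API is called.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Post:
    """Hypothetical, simplified public post from a social network."""
    network: str                 # e.g. "twitter", "facebook", "foursquare"
    text: str
    lat: Optional[float] = None  # present only for geolocalized posts
    lon: Optional[float] = None


def matches(post, keywords):
    """True if the post text contains any keyword (case-insensitive)."""
    text = post.text.lower()
    return any(keyword.lower() in text for keyword in keywords)


def capture(posts, keywords):
    """Return (captured, geo): all matching posts, and the subset of
    them that carries coordinates and can therefore be placed in space."""
    captured = [p for p in posts if matches(p, keywords)]
    geo = [p for p in captured if p.lat is not None and p.lon is not None]
    return captured, geo
```

In this sketch, everything in `captured` would become content on the website, while only the posts in `geo` would be candidates for the AR experience.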

This possibility proved really successful with all workshop participants, who imagined a series of scenarios in which User Generated Content could be automatically used to enrich our experience of cities and of physical spaces, including commerce, knowledge, education, activism, politics, art and communication.

One very interesting concept emerged while collaboratively creating the DataActor plugin during the workshop: the possibility of using our visual space as a digital interface. Together with the participants, we developed a scenario that defines a novel usage grammar for this space: content for kids is placed in the lower part of the visual space, between 70 cm and 1.2 meters from the ground; all the AR Movie content sits between 1.2 meters and 5 meters from the ground; and the content produced by the DataActor appears above the 5-meter limit. This concept has proven really interesting for developing accessible, usable AR content, and will be further explored in the near future.
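The height bands of this scenario can be written down as a tiny Python function. It is a sketch for illustration only: the function name and the layer labels are invented here, and the handling of the exact boundaries (1.2 m counted as movie content, 5 m still counted as movie content, anything below 70 cm left unassigned) is our assumption, since the scenario does not specify it.

```python
def layer_for_height(height_m: float) -> str:
    """Map a height above the ground (in meters) to a content layer."""
    if height_m < 0.7:
        return "unassigned"   # assumption: nothing is placed below 70 cm
    if height_m < 1.2:
        return "kids"         # 70 cm up to 1.2 m: content for kids
    if height_m <= 5.0:
        return "movie"        # 1.2 m up to 5 m: AR Movie content
    return "data_actor"       # above 5 m: DataActor content
```

For example, `layer_for_height(1.0)` returns `"kids"` and `layer_for_height(8.0)` returns `"data_actor"`.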

The DataActor will be released together with the rest of the materials of the RWR workshop in the next few days.

The AR Movie

We put everything together and, on the last day, we produced the AR Movie.

The movie picks up on the possibilities for synchronicity offered by the concept of Spatial Narrative and builds a very intriguing story: a few minutes before the End of the World (on December 21 2012 :) ) people start realizing that something is not right because the coffee has run out.

The movie has already been submitted to the Apple App Store and will be available in a few days, as soon as the review process runs its course.

The Meta Narrative

RWR is a workshop about ubiquitous publishing and Augmented Reality. So we decided to create a meta narrative throughout it, by using ubiquitous publishing and AR tools.

Participants were given a kit which included a series of tools that could be used to create a Ubiquitous Diary.

The Ubiquitous Diary is composed of a set of QRCode stickers that can be attached anywhere: on objects, places, bodies, books…

The first time one of these stickers is scanned, it lets the user create content that will be associated with the sticker itself. Subsequent scans show this content and let other users comment on it. The initial user can update their content either by scanning the sticker again or by using the WordPress website on which the system is based.

This very simple tool has proven to be very effective, with participants commenting on every space of the workshop and of the city and even creating a wonderful present for us at the end of the workshop: a map with several QRCode stickers of the Ubiquitous Diary attached to it. A really nice way to keep in touch in the future.
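The behavior of a sticker can be modeled as a small state machine. The Python sketch below is an in-memory illustration under our own assumptions, not the code of the WordPress-based system: the `Sticker` and `Diary` classes, the `scan` method and its return values are hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Sticker:
    """Hypothetical state attached to one QRCode sticker."""
    owner: Optional[str] = None
    content: Optional[str] = None
    comments: list = field(default_factory=list)


class Diary:
    """In-memory model of the stickers, keyed by sticker id."""

    def __init__(self):
        self.stickers = {}

    def scan(self, sticker_id, user, text=None):
        """Handle one scan and return what happened."""
        sticker = self.stickers.setdefault(sticker_id, Sticker())
        if sticker.owner is None:
            if text is None:
                return "empty"      # scanned, but no content created yet
            # The first user to add content becomes the sticker's owner.
            sticker.owner, sticker.content = user, text
            return "created"
        if text is None:
            return "viewed"         # later scans just show the content
        if user == sticker.owner:
            sticker.content = text  # the initial user updates the content
            return "updated"
        sticker.comments.append((user, text))
        return "commented"
```

Keeping the owner on the sticker itself is what lets a second scan by the same person act as an update, while a scan by anyone else becomes a comment.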

The Ubiquitous Diary, together with the Social Media stream captured using the Storify web tool, formed a novel kind of meta-narrative through which the workshop and its participants were able to look upon themselves, and to interact, communicate, relate and share information, knowledge and emotions at every moment of the workshop and even after it.

Open Space for Experimentation

The RWR workshop will provide all participants with a set of tools and documentation. These will be published within a few days of the date of this post, as soon as we have tuned and prepared all the elements, which you can see represented on the infographic at the top of this post.

Among the tools, an AR sandbox will be made available to all participants, so that they can freely experiment with their ideas on how to use ubiquitous publishing tools and AR narratives. The sandbox will let participants use a fully set-up system and customize their apps, carrying out experiments, prototypes and research in an accessible, ready-to-use (and to destroy, if something goes wrong in the experiments… sandboxes are wonderful :) ) environment with all the tools introduced during the workshop already installed and configured.

Next Steps

In the next few days the full kit of materials will be published (including documentation, slides, software and how-to presentations and tutorials). You will be able to find it here on Art is Open Source and on the RWR website (you can take a sneak peek at the website as it evolves by clicking HERE)

The AR Movie has already been submitted to the Apple App Store and will be available in the next few days, once the review is complete.

We are designing and planning the next steps of this wonderful and insightful experience.

Stay tuned for the incredible news about RWR!

Credits:

We wish to thank all participants of the workshop for creating such an incredible experience: we’ve learned just as much as you did! Thank you all!

The participants are:

Renato Alberti, Laura Alessandrini, Federico Auer, Anita Bačić, Halina Bause, Rolf Bindemann, Gianmarco Bonavolontà, Marica Buquicchio, Corrado Caianiello, Bruno Capezzuoli, Francesca Conti, Francesca De Chiara, Luigi Ferrara, Silva Ferretti, Gianluca Frisario, Alex Giordano, Verena Hermann, Salvatore Iaconesi, Melissa Leithwood, Mario Mele, Mauro Micozzi, Sergio Militti, Elisa Neri, Silvia Nitti, Derval O’Neill, Daniela Palumbo, Patrizia Pappalardo, Eleonora Parisi, Oriana Persico, Francesca Rossi, Alessandro Sabatucci, Ruben Santillan, Riccardo Schiano, Elena Spagnesi, Vincenzo Torsitano, Penny Travlou and Roberto Virtuoso.

Special thanks to Mr. Aniello and his “Pasticceria Leone” in Calvanico (Salerno) for his wonderful participation and the delightful Babà experience he treated us to :)

Thank you all so much! :)

RWR is a project by:

in collaboration with:

Centro Studi Etnografia Digitale

with the support of:

Media Partners:

![[ AOS ] Art is Open Source](https://www.artisopensource.net/network/artisopensource/wp-content/uploads/2020/03/AOSLogo-01.png)