AOS will be in Macerata (May 2-6, 2014) at the Youbiquity Festival for a workshop in which we will learn how to create a ubiquitous soundscape and installation, building an immersive geography of sound. “When you listen carefully to the soundscape it becomes...

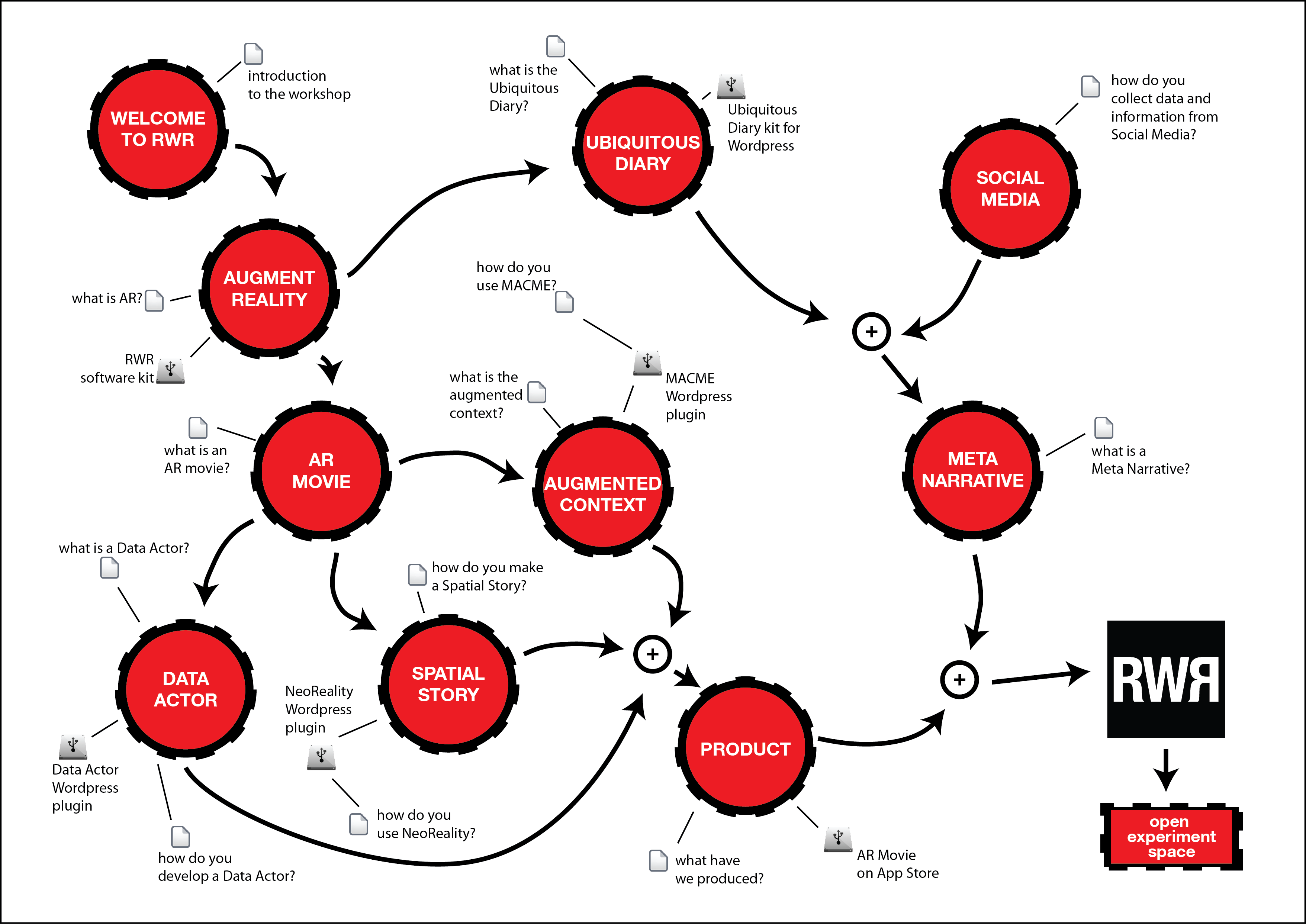

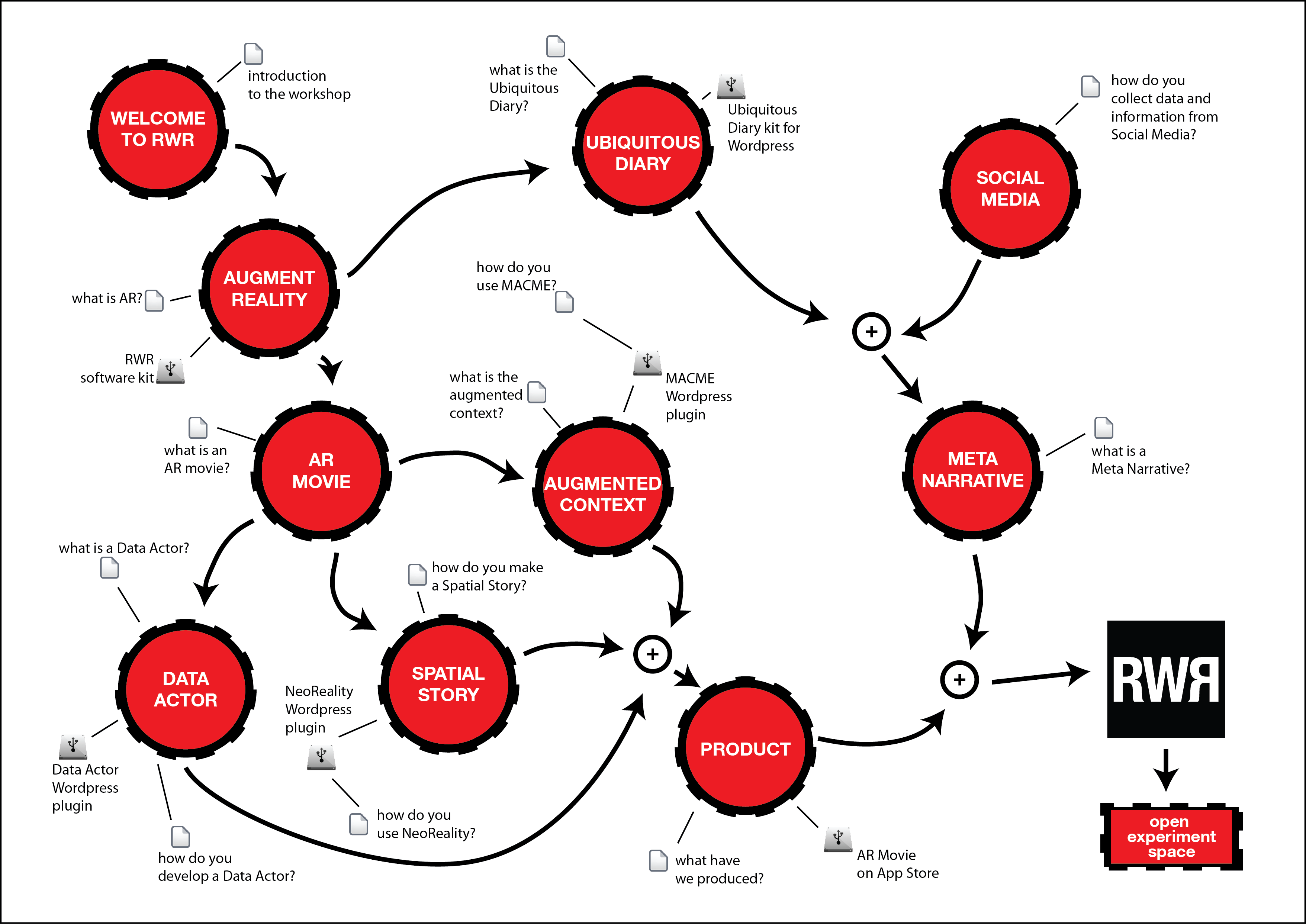

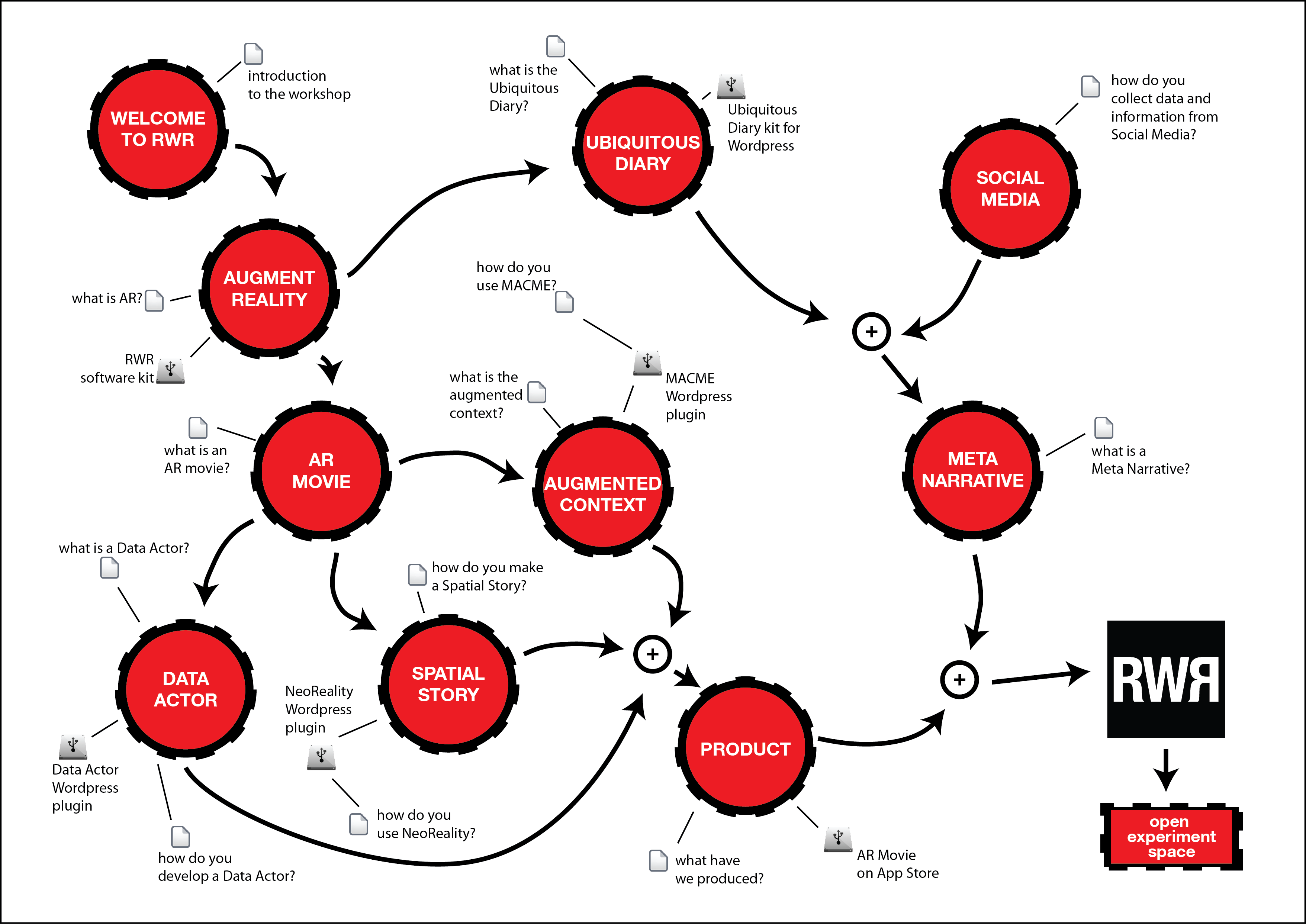

The Read/Write Reality workshop ended a few days ago, and it was an incredible experience! A truly innovative and fun project, with a wonderful crowd bringing different backgrounds, approaches, desires and attitudes. Here is some information about the workshop and its...
